3D-POP – using AI to study animal behaviour

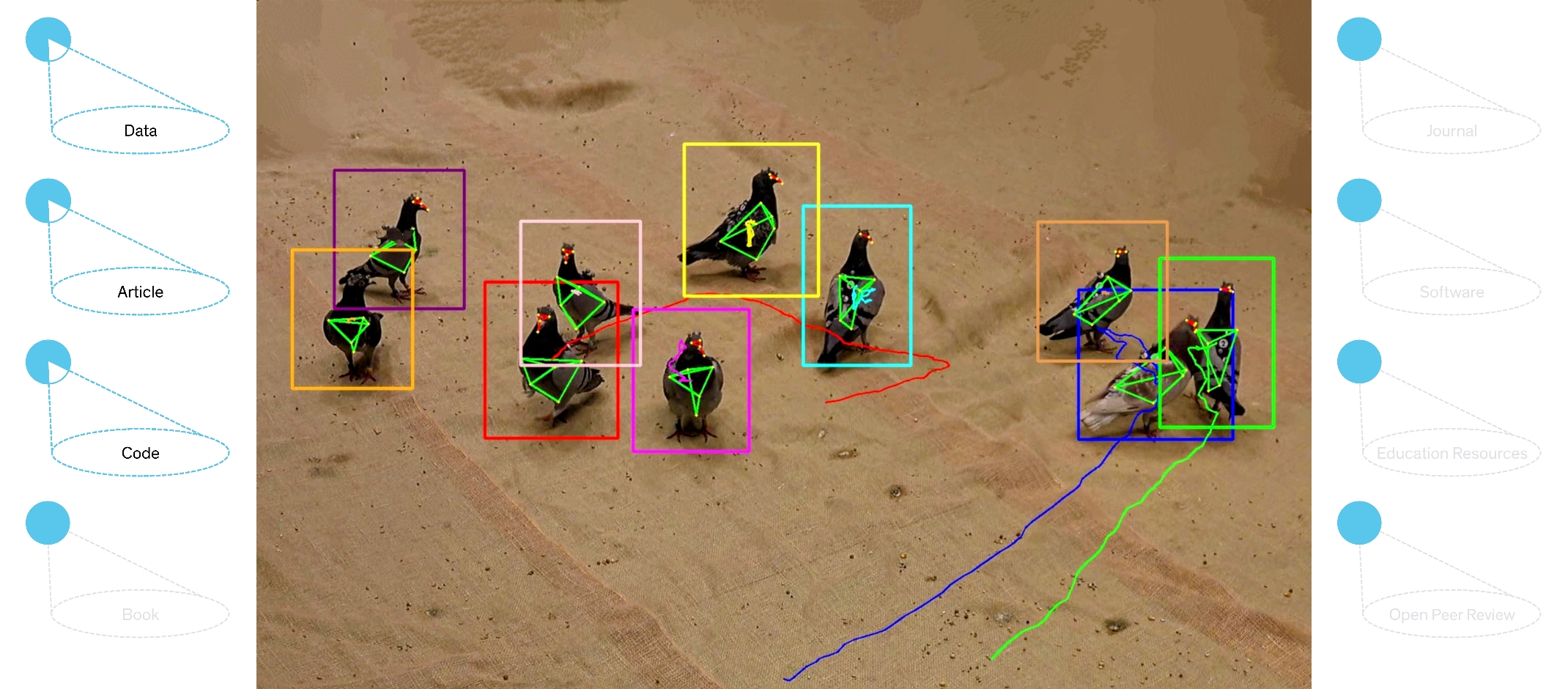

Obtaining information about the posture of animals and their movements in three-dimensional space from video recordings without having to tag the animals or laboriously evaluate every single picture? Advances in artificial intelligence (AI) and computer vision are making just that possible. However, in order for an AI to identify the behaviour of animals, it must first "learn" exactly what that behaviour looks like. This requires extensive training data that show the animals in a variety of possible situations and from different angles, and that reliably describe the captured behaviour. Experts call this annotated data.

An open training dataset – 3D-POP (short for 3D-Posture of Pigeons) – consisting of about 300,000 annotated individual images of freely moving pigeons has now been made available by Alex Chan and Hemal Naik from the Cluster of Excellence "Centre for the Advanced Study of Collective Behaviour" at the University of Konstanz. For each image, the dataset contains information on the animals’ postures, movement trajectories, and identities. In their accompanying publication, the researchers also describe a method for creating large annotated datasets such as 3D-POP in a semi-automated way.

The article on the semi-automated production of the 3D-POP dataset is available open access.

The annotated training dataset 3D-POP (doi: 10.17617/3.HPBBC7) can be downloaded for free from the Max Planck Society Research Data Repository (EDMOND).

For an implementation of the annotation pipeline “3D-POP-AP”, visit the corresponding open code repository on GitHub.